Multiple Logistic Regression

Types of statistical models

| response | predictor(s) | model |
|---|---|---|
| quantitative | one quantitative | simple linear regression |
| quantitative | two or more (of either kind) | multiple linear regression |
| binary | one (of either kind) | simple logistic regression |
| binary | two or more (of either kind) | multiple logistic regression |

Types of statistical models

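| predictor variables | linear predictor | ordinary regression models | logistic regression models |
|---|---|---|---|
| one: $x$ | $\beta_0+\beta_1x$ | Response $y$ | $\text{logit}(\pi)=\log\left(\frac{\pi}{1-\pi}\right)$ |
| several: $x_1,x_2,\dots,x_k$ | $\beta_0+\beta_1x_1+\dots+\beta_kx_k$ | Response $y$ | $\text{logit}(\pi)=\log\left(\frac{\pi}{1-\pi}\right)$ |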

Multiple logistic regression

- ✌️ forms

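| Form | Model |
|---|---|
| Logit form | $\log\left(\frac{\pi}{1-\pi}\right)=\beta_0+\beta_1x_1+\beta_2x_2+\dots+\beta_kx_k$ |
| Probability form | $\pi=\frac{e^{\beta_0+\beta_1x_1+\beta_2x_2+\dots+\beta_kx_k}}{1+e^{\beta_0+\beta_1x_1+\beta_2x_2+\dots+\beta_kx_k}}$ |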

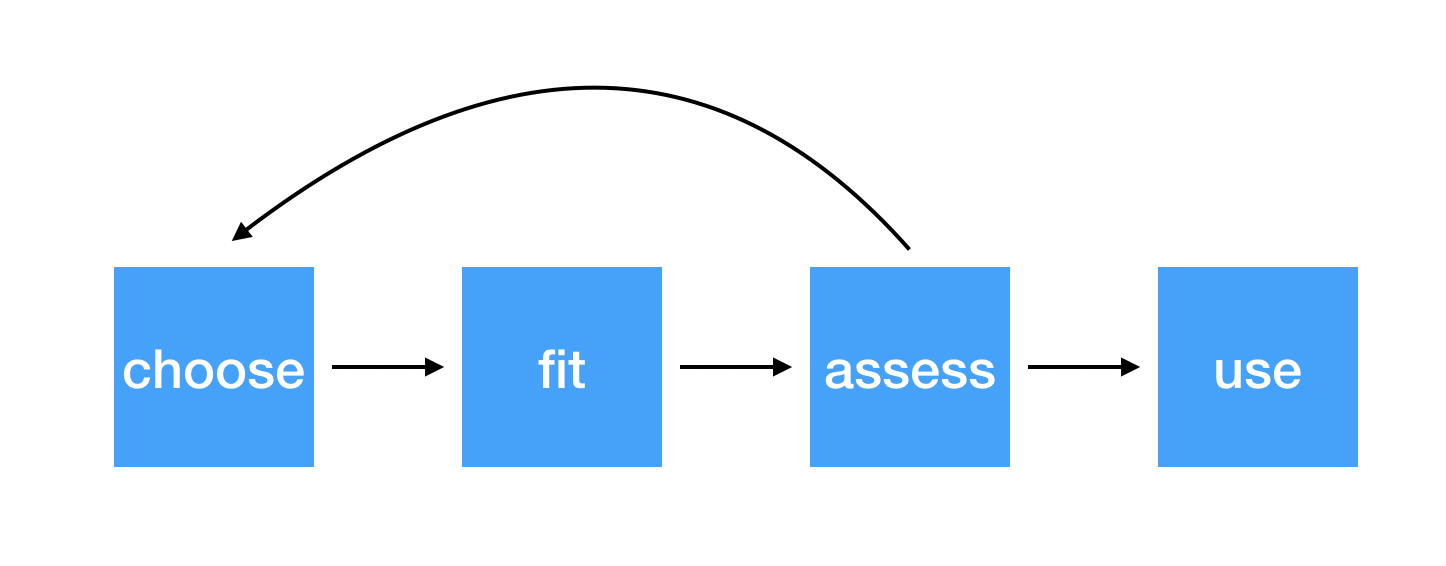
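The two forms are algebraically equivalent. As a short sketch of why, write $\eta$ for the linear predictor (the symbol $\eta$ is shorthand introduced here, not notation from the slides) and solve the logit form for $\pi$:

$$
\begin{aligned}
\log\left(\frac{\pi}{1-\pi}\right) &= \eta, \qquad \eta = \beta_0+\beta_1x_1+\dots+\beta_kx_k \\
\frac{\pi}{1-\pi} &= e^{\eta} \\
\pi &= e^{\eta}(1-\pi) \\
\pi\left(1+e^{\eta}\right) &= e^{\eta} \\
\pi &= \frac{e^{\eta}}{1+e^{\eta}}
\end{aligned}
$$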

Steps for modeling

Fit

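```r
# Assumed setup (not shown on the original slide): MedGPA ships with
# Stat2Data, tidy() comes from broom, and %>% from magrittr.
library(Stat2Data)
library(broom)
library(magrittr)

data(MedGPA)
glm(Acceptance ~ MCAT + GPA, data = MedGPA, family = "binomial") %>%
  tidy(conf.int = TRUE)
## # A tibble: 3 x 7
##   term        estimate std.error statistic  p.value conf.low conf.high
##   <chr>          <dbl>     <dbl>     <dbl>    <dbl>    <dbl>     <dbl>
## 1 (Intercept)  -22.4       6.45      -3.47 0.000527 -36.9      -11.2
## 2 MCAT           0.165     0.103      1.59 0.111     -0.0260     0.383
## 3 GPA            4.68      1.64       2.85 0.00439    1.74       8.27
```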

What does this do?

```r
glm(Acceptance ~ MCAT + GPA, data = MedGPA, family = "binomial") %>%
  tidy(conf.int = TRUE) %>%
  kable()
```

| term | estimate | std.error | statistic | p.value | conf.low | conf.high |
|---|---|---|---|---|---|---|
| (Intercept) | -22.373 | 6.454 | -3.47 | 0.001 | -36.894 | -11.235 |
| MCAT | 0.165 | 0.103 | 1.59 | 0.111 | -0.026 | 0.383 |
| GPA | 4.676 | 1.642 | 2.85 | 0.004 | 1.739 | 8.272 |
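To connect the fitted coefficients to the probability form, here is a minimal base-R sketch that plugs the rounded estimates from the table into the model. The applicant values (`mcat = 40`, `gpa = 3.7`) are hypothetical and chosen only for illustration, and because the coefficients are rounded the resulting probability is approximate.

```r
# Rounded estimates copied from the coefficient table above
b0     <- -22.373
b_mcat <- 0.165
b_gpa  <- 4.676

# Hypothetical applicant (illustrative values, not from the data)
mcat <- 40
gpa  <- 3.7

# Logit form: the linear predictor gives the log odds of acceptance
log_odds <- b0 + b_mcat * mcat + b_gpa * gpa

# Probability form: convert the log odds to a probability
prob <- exp(log_odds) / (1 + exp(log_odds))

# plogis() is base R's inverse logit and gives the same value
prob_check <- plogis(log_odds)

print(c(log_odds = log_odds, prob = prob))
```

With a fitted model object, `predict(model, newdata = ..., type = "response")` would do the same calculation using the unrounded coefficients.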

Assess

What are the assumptions of multiple logistic regression?

- Linearity

- Independence

- Randomness

Assess

How do you determine whether the conditions are met?

- Linearity: empirical logit plots

- Independence: look at the data generation process

- Randomness: look at the data generation process (does the spinner model make sense?)
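One way to draw an empirical logit plot for the linearity check is sketched below in base R. It assumes the `MedGPA` data come from the Stat2Data package and that `Acceptance` is coded 0/1. The choice of five GPA groups and the 0.5 adjustment (which avoids taking the log of zero) are illustrative choices, not something the slides prescribe.

```r
library(Stat2Data)  # assumed source of the MedGPA data
data(MedGPA)

# Split GPA into 5 groups of roughly equal size (the number of groups is a choice)
breaks    <- quantile(MedGPA$GPA, probs = seq(0, 1, by = 0.2))
gpa_group <- cut(MedGPA$GPA, breaks = breaks, include.lowest = TRUE)

# Per-group counts of acceptances, group sizes, and mean GPA
accepted <- tapply(MedGPA$Acceptance, gpa_group, sum)
n        <- tapply(MedGPA$Acceptance, gpa_group, length)
mean_gpa <- tapply(MedGPA$GPA, gpa_group, mean)

# Empirical logit with a 0.5 adjustment so groups with 0% or 100% stay finite
emp_logit <- log((accepted + 0.5) / (n - accepted + 0.5))

# Roughly linear points support the linearity condition for GPA
plot(mean_gpa, emp_logit,
     xlab = "Mean GPA in group", ylab = "Empirical logit of acceptance")
```

In a multiple logistic regression the same plot would be drawn separately for each quantitative predictor.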

Assess

If I have two nested models, how do you think I can determine if the full model is significantly better than the reduced?

- We can compare values of $-2\log(L)$ (the deviance) between the two models

- Calculate the "drop in deviance", $G = \left(-2\log L_{\text{reduced}}\right) - \left(-2\log L_{\text{full}}\right)$

- This is a "likelihood ratio test"

- Under the null hypothesis that the reduced model is adequate, $G$ is approximately $\chi^2$ distributed with $p$ degrees of freedom

- $p$ is the difference in the number of predictors between the full and reduced models

Assess

- We want to compare a model with GPA and MCAT to one with only GPA

```r
# Reduced model: GPA only
glm(Acceptance ~ GPA, data = MedGPA, family = binomial) %>%
  glance()
## # A tibble: 1 x 7
##   null.deviance df.null logLik   AIC   BIC deviance df.residual
##           <dbl>   <int>  <dbl> <dbl> <dbl>    <dbl>       <int>
## 1          75.8      54  -28.4  60.8  64.9     56.8          53

# Full model: GPA and MCAT
glm(Acceptance ~ GPA + MCAT, data = MedGPA, family = binomial) %>%
  glance()
## # A tibble: 1 x 7
##   null.deviance df.null logLik   AIC   BIC deviance df.residual
##           <dbl>   <int>  <dbl> <dbl> <dbl>    <dbl>       <int>
## 1          75.8      54  -27.0  60.0  66.0     54.0          52

# Drop in deviance: reduced minus full
56.83901 - 54.01419
## [1] 2.82

# p-value from the chi-squared distribution with 1 degree of freedom
pchisq(56.83901 - 54.01419, df = 1, lower.tail = FALSE)
## [1] 0.0928
```
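The drop-in-deviance test can also be computed directly from fitted model objects instead of copying deviances by hand. The sketch below assumes the `MedGPA` data come from the Stat2Data package; the object names `reduced` and `full` are illustrative.

```r
library(Stat2Data)  # assumed source of the MedGPA data
data(MedGPA)

reduced <- glm(Acceptance ~ GPA, data = MedGPA, family = binomial)
full    <- glm(Acceptance ~ GPA + MCAT, data = MedGPA, family = binomial)

# Drop in deviance and its degrees of freedom, taken from the model objects
G <- deviance(reduced) - deviance(full)
p <- df.residual(reduced) - df.residual(full)
p_value <- pchisq(G, df = p, lower.tail = FALSE)
print(c(G = G, df = p, p_value = p_value))

# Base R's anova() reports the same likelihood ratio test in one call
anova(reduced, full, test = "Chisq")
```

Taking the degrees of freedom from `df.residual()` avoids miscounting when the models differ by more than one term.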

- We want to compare a model with GPA, MCAT, and number of applications to one with only GPA

```r
# Reduced model: GPA only
glm(Acceptance ~ GPA, data = MedGPA, family = "binomial") %>%
  glance()
## # A tibble: 1 x 7
##   null.deviance df.null logLik   AIC   BIC deviance df.residual
##           <dbl>   <int>  <dbl> <dbl> <dbl>    <dbl>       <int>
## 1          75.8      54  -28.4  60.8  64.9     56.8          53

# Full model: GPA, MCAT, and number of applications
glm(Acceptance ~ GPA + MCAT + Apps, data = MedGPA, family = "binomial") %>%
  glance()
## # A tibble: 1 x 7
##   null.deviance df.null logLik   AIC   BIC deviance df.residual
##           <dbl>   <int>  <dbl> <dbl> <dbl>    <dbl>       <int>
## 1          75.8      54  -26.8  61.7  69.7     53.7          51

# The full model has two more predictors, so df = 2
pchisq(56.83901 - 53.68239, df = 2, lower.tail = FALSE)
## [1] 0.206
```

Use

- We can calculate confidence intervals for the $\beta$ coefficients: $\hat\beta \pm z^* \times SE_{\hat\beta}$

- To determine whether individual explanatory variables are statistically significant, we can calculate p-values based on the z-statistic of the β coefficients (using the normal distribution)
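As a worked instance of the two bullets above, this base-R sketch computes a 95% interval and a z-test for the GPA coefficient from the rounded estimate and standard error reported in the earlier output. The interval from this formula is a Wald interval. It will not match the `conf.low` and `conf.high` columns exactly, because `tidy(conf.int = TRUE)` on a `glm` relies on `confint()`, which uses profile likelihood.

```r
# Rounded values for GPA from the coefficient table
est <- 4.676
se  <- 1.642

# Wald 95% confidence interval: estimate +/- z* x SE
z_star  <- qnorm(0.975)
wald_ci <- est + c(-1, 1) * z_star * se

# z-statistic and two-sided p-value from the standard normal distribution
z       <- est / se
p_value <- 2 * pnorm(abs(z), lower.tail = FALSE)

print(round(c(lower = wald_ci[1], upper = wald_ci[2], z = z, p = p_value), 4))
```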

Use

How do you interpret these β coefficients?

```r
glm(Acceptance ~ MCAT + GPA, data = MedGPA, family = "binomial") %>%
  tidy(conf.int = TRUE)
## # A tibble: 3 x 7
##   term        estimate std.error statistic  p.value conf.low conf.high
##   <chr>          <dbl>     <dbl>     <dbl>    <dbl>    <dbl>     <dbl>
## 1 (Intercept)  -22.4       6.45      -3.47 0.000527 -36.9      -11.2
## 2 MCAT           0.165     0.103      1.59 0.111     -0.0260     0.383
## 3 GPA            4.68      1.64       2.85 0.00439    1.74       8.27
```

$\hat\beta$ interpretation in multiple logistic regression

The coefficient for $x$ is $\hat\beta$ (95% CI: $LB_{\hat\beta}, UB_{\hat\beta}$). A one-unit increase in $x$ is associated with an expected change of $\hat\beta$ in the log odds of $y$, holding all other variables constant.

$e^{\hat\beta}$ interpretation in multiple logistic regression

The odds ratio for $x$ is $e^{\hat\beta}$ (95% CI: $e^{LB_{\hat\beta}}, e^{UB_{\hat\beta}}$). A one-unit increase in $x$ is associated with an $e^{\hat\beta}$-fold expected change in the odds of $y$, holding all other variables constant.
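To make the odds-ratio interpretation concrete, the sketch below exponentiates the rounded GPA estimate and interval from the earlier table. The 0.1-point rescaling is an illustrative choice added here, since a full one-unit change in GPA spans most of the observed range.

```r
# Rounded GPA estimate and 95% interval from the coefficient table
beta_gpa <- 4.676
ci_gpa   <- c(1.739, 8.272)

# Odds ratio and its interval for a one-unit increase in GPA
or_gpa <- exp(beta_gpa)
or_ci  <- exp(ci_gpa)

# Odds ratio for a 0.1-point increase in GPA (illustrative rescaling)
or_tenth <- exp(0.1 * beta_gpa)

print(c(or_gpa = or_gpa, or_low = or_ci[1], or_high = or_ci[2], or_tenth = or_tenth))
```

With broom, `tidy(conf.int = TRUE, exponentiate = TRUE)` reports the coefficients and interval endpoints on the odds-ratio scale directly.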

Summary

| | Ordinary regression | Logistic regression |
|---|---|---|
| test or interval for $\beta$ | $t=\frac{\hat\beta}{SE_{\hat\beta}}$ | $z=\frac{\hat\beta}{SE_{\hat\beta}}$ |
| | t-distribution | z-distribution |
| test for nested models | $F=\frac{\Delta SSModel/p}{SSE_{full}/(n-k-1)}$ | $G=\Delta(-2\log L)$ |
| | F-distribution | $\chi^2$-distribution |